API Monolith Migration: Part 1

Laura Barber, Senior Site Reliability Engineer

While at Peloton we’re always moving forward, we also like to take the time to look back and see the progress we’ve made from a technical perspective. Some of the biggest tests of our technology stack are our event-based live rides: Turkey Burn, our annual runs and rides for working off those U.S. Thanksgiving calories; Alex Toussaint’s hosted ride celebrating the final two episodes of the ESPN documentary “The Last Dance”; Artist Series classes celebrating amazing artists from around the world; and more! These classes draw our highest live attendance, often with tens of thousands of members taking a single class concurrently. We spend a lot of time in advance testing and preparing for these events to ensure that every part of our stack is tip-top and ready to scale!

A key part of our infrastructure is the API Monolith. This is a series of interconnected services that serve almost all of our public customer-facing API endpoints. Back in 2019 the Monolith was running entirely on EC2 instances in AWS, provisioned via Terraform and configured using Chef. While day-to-day usage was handled appropriately, we encountered a ton of pain points around exceptionally large events. The process of scaling was manual, failure-prone, and took significant effort. Additionally, we were looking for a more flexible deployment strategy, immutable images, improved observability, and an improved developer experience.

With this in mind, in mid-2019 we kicked off an initiative to migrate the Monolith to run entirely on Kubernetes. With the most recent large live ride milestone, Turkey Burn, held on Thanksgiving 2021, we officially completed that effort by having the class series served entirely by Kubernetes.

It was not without challenges, which brings us to the point of this blog! This is written from the perspective of the Core Engineering Compute Team, part of Peloton’s Platform organization. We were responsible for the entire Kubernetes setup, managing clusters, additional tooling, providing guidance for the migration, and lots of troubleshooting!

Dual Stack

While we began planning the migration with an optimistic timeline, we quickly realized that this wouldn’t be a simple lift and shift. We needed a mechanism that would let us easily route a percentage of traffic to deployments on either EC2 or Kubernetes. We relied heavily on Cloudflare for this and set up a simple weighted load balancer with one origin pointing to EC2 and the other to Kubernetes (fronted by the Nginx Ingress Controller). We leveraged this frequently, and our traffic weight became the marker of progress on the migration effort.
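The effect of a single traffic weight can be pictured with a minimal sketch. This is not Cloudflare’s API or the configuration used in the migration; the origin names, the weights, and the `pick_origin` and `simulate` helpers are hypothetical, and the code only illustrates how a weight controls the share of requests each stack receives.

```python
import random

# Hypothetical origins and weights; each weight is a relative share of traffic.
ORIGIN_WEIGHTS = {"ec2": 0.9, "kubernetes": 0.1}


def pick_origin(weights, rng):
    """Pick one origin at random, proportionally to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights, requests, seed=0):
    """Count how many simulated requests land on each origin."""
    rng = random.Random(seed)
    counts = {name: 0 for name in weights}
    for _ in range(requests):
        counts[pick_origin(weights, rng)] += 1
    return counts


if __name__ == "__main__":
    # Roughly 10% of simulated requests should reach the Kubernetes origin.
    print(simulate(ORIGIN_WEIGHTS, 10_000))
```

Shifting the migration forward then amounts to raising the Kubernetes weight and lowering the EC2 weight, with a weight of zero draining an origin entirely.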

Rollout Plan

Now that we had the mechanism to control the rollout, we needed to decide how we would approach it. We didn’t call this the API monolith for nothing! There are a ton of services and interdependencies involved here, and we were quick to identify that we couldn’t do the entire migration in one lift; we had to roll out services in smaller groups. There was already a pretty logical breakdown of services in EC2, some corresponding to their own AWS Autoscaling Groups (ASGs). So we began with this and reduced it to a few smaller service milestones.

Below we have a graph showing a selection of some of the services we migrated and the number of days it took to migrate them (from 0% to 100%). Note that this is not a 1:1 service mapping but instead logical groups of services that we focused migration efforts on.

API Core represents the Monolith “core” services, providing endpoints for client interaction.

CMS represents endpoints relating to customer management, used by our internal staff.

Scourgify is an internal framework of management scripts intended for ad-hoc use.

Cronjobs are somewhat self-explanatory, but they were a huge part of our EC2 Monolith management and definitely couldn’t be left behind!

A bar graph showing a selection of some of the services Peloton migrated and the number of days it took to migrate them (from 0% to 100%).

As you can see, most of the smaller services (above) were less involved or only internally facing so we were able to move these faster and with greater efficiency. However, for the API Core service (below), which was both large and complicated, we took much longer and ran into quite a few bumps in the road.

A bar graph showing the API Core migration and the number of days it took to migrate.

Overall, we started the project with API Core services and migrated the other smaller services at various points along the entire Monolith migration effort.

A graph showing the length of time it took Peloton to migrate services.

Issues

No rollout is perfect, and ours was no exception. Considering the number of moving parts, we did well in the end, but it was certainly stressful at the time. We’d like to highlight a few of the main problems we ran across and how we solved them.

Scaling

Peloton has some interesting scaling challenges: we have a fairly consistent load with some anticipated extra-large events throughout the year, or so we thought. When the COVID-19 pandemic hit, we saw explosive growth, which impacted our migration efforts significantly. Classes suddenly had larger than expected attendance, successful load tests came under increased scrutiny, and we had to communicate all of this among multiple teams while everyone was working remotely! With projected class sizes increasing rapidly, we were suddenly looking at a scale we thought we wouldn’t be hitting for quite some time. While a challenge, this helped us analyze and discover several issues.

Deployment Speed

As previously mentioned, one of our pain points with the Monolith running on EC2 was slow and flaky deployments. The API Core project and its components previously ran on a series of ASGs backed by EC2 instances provisioned by Chef. Chef deployments could take anywhere from 10 to 30 minutes and frequently failed in an opaque fashion. With developers unable to clearly identify why a deployment failed, and knowing that a redeploy could take another significant chunk of time, this was not a scalable solution.

Moving onto Kubernetes, the teams working with the Monolith knew they wanted a quicker, more reliable, and easier to understand deployment process. The Compute team was, of course, confident that we could enable this! But naturally it wasn’t that easy. During some of the initial migration efforts, we were seeing total deployment turnaround times (100% of pods updated and running) of upwards of 40 minutes, a step backwards in deployment time.

Too Many Pods

In early July 2020 we were anticipating the entire API Core needing 13,000-26,000 pods for a successful 100k rider load test, with a single service needing over 10,000 pods. With this many pods we would end up running over 3,000 large EC2 nodes as workers.

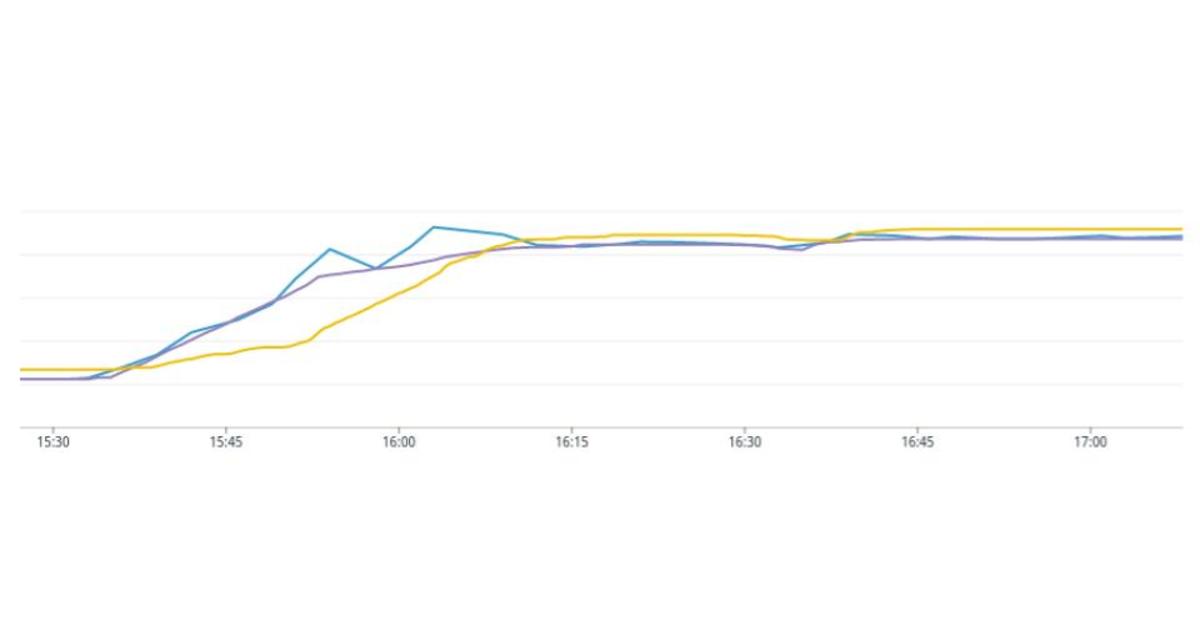

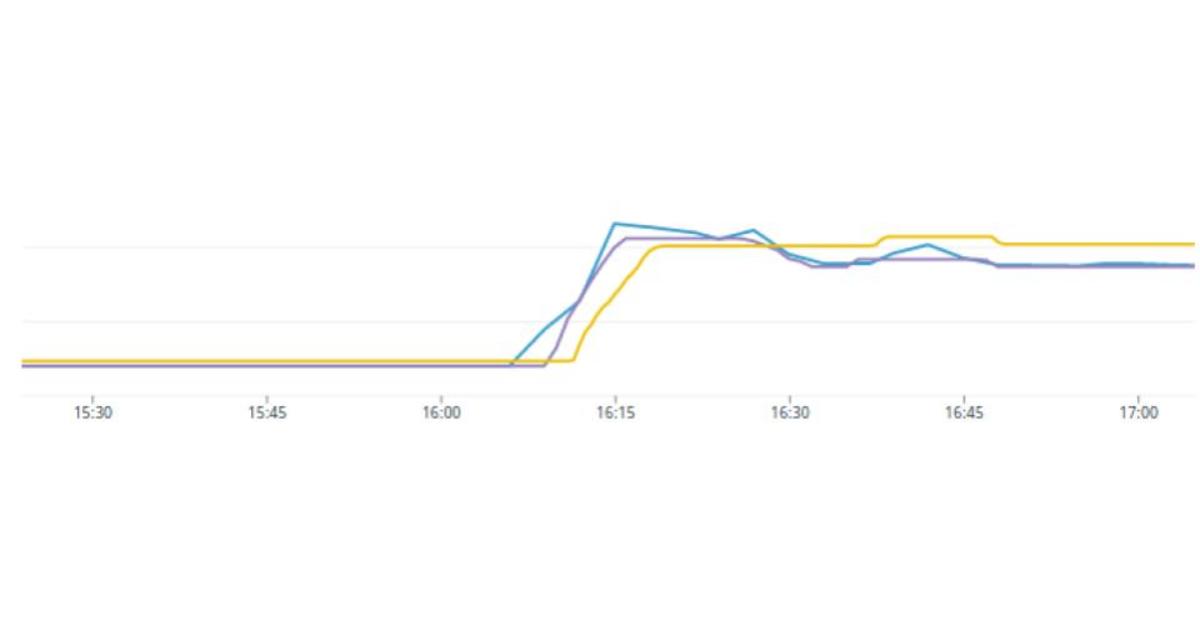

It probably comes as no surprise that we started experiencing issues very quickly. Requiring so many nodes contributed to our slow deployment speeds, so we began trying to identify where the slowdown was. We did some analysis on node launch vs node ready states, and learned that the nodes were taking a significant time (5-10 minutes) to announce that they were ready and begin accepting workloads. This was because a node does not enter a Ready state until the CNI reports that everything is good to go. We had encountered a race condition where the CNI pods would get stuck fetching the iptables lock, which subsequently caused the Readiness probe to fail. This was later solved with CNI v1.7.2 and resulted in the following improvement to node responsiveness.

Two line graphs that show the before and after.
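A launch-versus-ready analysis of this kind can be sketched in a few lines. The records below are hypothetical sample data rather than measurements from the migration, and the `ready_latencies` and `slow_nodes` helpers are illustrative names; the sketch only shows the shape of the calculation.

```python
from datetime import datetime
from statistics import median

# Hypothetical records: (node name, launch time, time the node became Ready).
SAMPLES = [
    ("node-a", "2020-07-01T12:00:00", "2020-07-01T12:06:30"),
    ("node-b", "2020-07-01T12:00:10", "2020-07-01T12:09:40"),
    ("node-c", "2020-07-01T12:00:20", "2020-07-01T12:05:20"),
]


def ready_latencies(samples):
    """Return the seconds between launch and Ready for each node."""
    latencies = {}
    for name, launched, ready in samples:
        delta = datetime.fromisoformat(ready) - datetime.fromisoformat(launched)
        latencies[name] = delta.total_seconds()
    return latencies


def slow_nodes(latencies, threshold_seconds):
    """Names of nodes that took longer than the threshold to become Ready."""
    return sorted(n for n, s in latencies.items() if s > threshold_seconds)


if __name__ == "__main__":
    latencies = ready_latencies(SAMPLES)
    print("median seconds to Ready:", median(latencies.values()))
    print("slower than 5 minutes:", slow_nodes(latencies, 300))
```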

We also tried various small tweaks, such as changing the maxUnavailable/maxSurge values (anywhere from 0% to 100%) to see if that would reduce deployment times. The default rollout strategy was only rolling out pods a few at a time, so a deployment of 10,000 pods would take hours. By increasing maxUnavailable we stopped waiting for old pods to terminate, and with maxSurge we pushed the cluster to deploy pods as fast as possible. Even with these adjustments we peaked at a deploy rate of about 1,000 pods per minute, and even that wasn’t consistently reproducible. Unsurprisingly, since we were running so many pods per service, increasing maxUnavailable/maxSurge made things worse, as we were effectively doubling the number of pods for that deployment (10,000 -> 20,000) during rollout. At this point we were seeing significantly increased control plane latency during these deployments. Some of this showed up as lag in receiving responses when running kubectl, and as pods missing from the Endpoint objects that back Services on the cluster. Upon further investigation, we discovered this error on many of our Endpoints:

Warning FailedToUpdateEndpoint 18m (x5985 over 7d3h) endpoint-controller Failed to update endpoint: etcdserver: request is too large

The end result of trying to schedule a 10,000 pod deployment was crashing the control plane. Cue panic as we brought down our entire test cluster.
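The doubling described above follows directly from how the rolling update settings translate into pod counts. Here is a minimal sketch of that arithmetic, assuming percentage-based values; the `rolling_update_bounds` helper is a hypothetical name, and the rounding (maxSurge up, maxUnavailable down) follows the documented Deployment behavior.

```python
def rolling_update_bounds(replicas, max_surge_pct, max_unavailable_pct):
    """Return (peak_pods, min_available) for percentage-based settings.

    A maxSurge percentage is rounded up and a maxUnavailable percentage
    is rounded down when converted to a pod count.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    # With both settings at 100%, a 10,000-pod deployment may briefly run
    # 20,000 pods while none of the old ones are required to stay up.
    print(rolling_update_bounds(10_000, 100, 100))
    print(rolling_update_bounds(10_000, 50, 50))
```

The sketch ignores the special cases Kubernetes applies at the edges, such as rejecting a configuration where both values are zero.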

While we did not initially understand the root cause of the issue, after a lot of investigation we finally discovered that we were running significantly more pods per service than the cluster could handle. The key to this was the Endpoint object: a list of all the pods for a particular Service. As of September 2020, the upstream Kubernetes Scalability SIG (Special Interest Group) reported performance degradation above 250 pods per service. Keep in mind we were trying to run 10,000.

All of the IP addresses for pods associated with a service are stored in a single Endpoint resource. As we create these Pods, each one sends an individual update to the node’s kube-proxy daemon and then upstream to the Control Plane API Server. With this pattern, the more backend pods a service has, the larger the Endpoint object that needs to be transmitted on every update. We determined that a rolling update of 5,000 pods would send roughly 3.75TB of data from the cluster to the control plane.
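A back-of-the-envelope model shows why the volume grows so quickly. It assumes that each pod contributes a fixed number of bytes to the object, that the whole object is re-sent to every recipient each time a pod changes, and that a rollout replaces every pod once. The byte size and recipient count are illustrative placeholders, not measurements, and the sketch is not meant to reproduce the 3.75TB figure.

```python
def full_object_transfer_bytes(pods, bytes_per_address, recipients):
    """Bytes sent if every pod change re-sends the whole Endpoint object."""
    object_size = pods * bytes_per_address  # size of one full object
    updates = pods                          # one update per replaced pod
    return object_size * updates * recipients


if __name__ == "__main__":
    # Placeholder inputs: 100 bytes per address, 1,000 recipients.
    for pods in (250, 5_000, 10_000):
        total = full_object_transfer_bytes(pods, 100, 1_000)
        print(f"{pods:>6} pods -> {total / 1e12:.4f} TB")
```

Because both the object size and the number of updates scale with the pod count, the total grows quadratically: doubling the pods in a service quadruples the data sent.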

The Kubernetes project was already working on a fix for this issue, called EndpointSlices. Unfortunately, at the time we were using EKS v1.16 and had quite a while to go until we could upgrade to v1.19, when this would first become available. With this information we could focus our efforts on reducing the number of pods for this service.
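The idea behind EndpointSlices is to split the addresses across several smaller objects, so that a single pod change only touches one of them. The sketch below is a simplified model, not the Kubernetes implementation: the helper names and generated addresses are hypothetical, and the slice size of 100 reflects the upstream default maximum.

```python
def build_slices(addresses, max_per_slice=100):
    """Split a list of pod addresses into fixed-size slices."""
    return [
        addresses[i:i + max_per_slice]
        for i in range(0, len(addresses), max_per_slice)
    ]


def addresses_resent_on_change(slices, changed_address):
    """Addresses re-sent when one address changes: only those in its slice."""
    for chunk in slices:
        if changed_address in chunk:
            return len(chunk)
    return 0


if __name__ == "__main__":
    # 10,000 made-up, unique pod addresses.
    pods = [f"10.0.{i // 250}.{i % 250}" for i in range(10_000)]
    sliced = build_slices(pods)
    single = build_slices(pods, max_per_slice=len(pods))
    print(len(sliced), addresses_resent_on_change(sliced, pods[1234]))
    print(len(single), addresses_resent_on_change(single, pods[1234]))
```

In this model a change to one pod re-sends 100 addresses instead of all 10,000, which is the property that makes very large services workable.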

To Be Continued

It took a huge cross-team effort as well as a big chunk of time to get to this point and to fully understand the issues we were encountering. It also took a lot of words to capture it all, so in Part 2 of this blog post we’ll discuss the changes we made to achieve an appropriate number of pods, some additional challenges we encountered, and the completion of the migration project.
